This is the JAWS PANKRATION 2024 Organizing Committee.

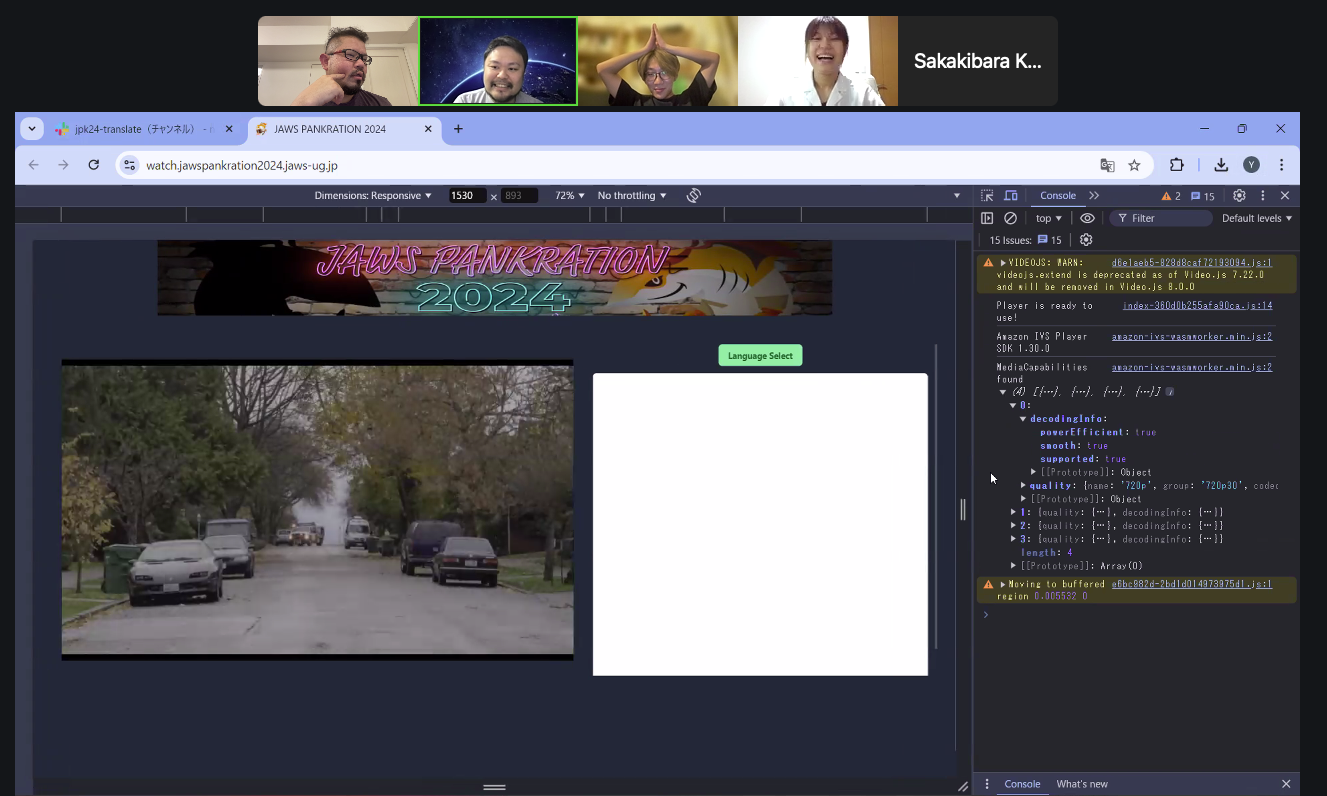

Here’s an overview of the simultaneous translation and streaming environment for this event.

(Figure: architecture diagram of the simultaneous translation and streaming environment)

In JAWS PANKRATION 2021, held three years ago, we used a translation device called Pocketalk, but a key feature of JAWS PANKRATION 2024 is that we developed our own automatic translation system to achieve a device-free environment.

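To describe the simultaneous translation process more concretely, it has two steps: the speaker's audio is first transcribed into text, and that text is then translated.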

This two-step process was the subject of many discussions. We considered using Whisper, a speech recognition model, together with OBS for transcription, but in testing its performance and operation proved unstable.

Ultimately, we decided to use Speech Recognition.
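As a rough illustration, assuming that "Speech Recognition" here means the browser's Web Speech API `SpeechRecognition` interface (the post does not name the API explicitly), picking out completed phrases could look something like the sketch below. The helper names `extractFinalPhrases` and `startCaptioning` are hypothetical and not taken from the actual system.

```javascript
// Sketch only. Assumes the Web Speech API; helper names are hypothetical.

// Collect the transcripts of results that the recognizer has marked as final.
// `event` has the shape of a SpeechRecognitionEvent: `resultIndex` is the
// index of the first result that changed, and each result exposes `isFinal`
// plus a list of alternatives, each with a `transcript`.
function extractFinalPhrases(event) {
  const phrases = [];
  for (let i = event.resultIndex; i < event.results.length; i++) {
    const result = event.results[i];
    if (result.isFinal) {
      // Use the first (highest-ranked) alternative.
      phrases.push(result[0].transcript.trim());
    }
  }
  return phrases.filter((phrase) => phrase.length > 0);
}

// Browser wiring. Throws where the API is unavailable (for example in Node.js).
function startCaptioning(onPhrase, lang = "ja-JP") {
  const Recognition =
    globalThis.SpeechRecognition || globalThis.webkitSpeechRecognition;
  if (!Recognition) {
    throw new Error("SpeechRecognition is not available in this environment");
  }
  const recognition = new Recognition();
  recognition.lang = lang;
  recognition.continuous = true; // keep listening across phrases
  recognition.interimResults = true; // interim results are skipped above
  recognition.onresult = (event) => {
    for (const phrase of extractFinalPhrases(event)) {
      onPhrase(phrase);
    }
  };
  recognition.start();
  return recognition;
}
```

In this sketch, `onPhrase` is the hook where each finished phrase would be handed off to the backend.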

Speech Recognition itself can be called from JavaScript. The speech it recognizes is divided into chunks by phrase. Each completed chunk triggers a request to Amazon API Gateway on the backend, which invokes an AWS Lambda function. The Lambda function takes the transcribed text as a parameter and sends a translation request to Amazon Translate. The translated text is saved in Amazon DynamoDB for later summarization and is also sent to the client side through an Amazon IVS Chat room, where it is displayed as translated subtitles in the viewer's browser.
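The flow on the Lambda side can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the committee's actual code: the request body shape (`text`, `sourceLang`, `targetLang`), the default languages, and the names `createTranslateHandler`, `translate`, `saveCaption`, and `publish` are all hypothetical. In a real deployment the three injected functions would presumably wrap AWS SDK for JavaScript v3 calls such as `TranslateTextCommand`, `PutItemCommand`, and the IVS Chat `SendEventCommand`; they are injected here so the sketch runs without AWS credentials.

```javascript
// Sketch only. All names and the payload shape are hypothetical.
function createTranslateHandler(deps) {
  return async function handler(event) {
    // API Gateway proxy integrations deliver the request body as a JSON string.
    let body;
    try {
      body = JSON.parse(event.body || "{}");
    } catch {
      return { statusCode: 400, body: JSON.stringify({ message: "invalid JSON" }) };
    }

    const text = typeof body.text === "string" ? body.text.trim() : "";
    if (!text) {
      return { statusCode: 400, body: JSON.stringify({ message: "text is required" }) };
    }
    const sourceLang = body.sourceLang || "ja"; // assumed default
    const targetLang = body.targetLang || "en"; // assumed default

    // Step 1: translate the transcribed phrase (Amazon Translate in the post).
    const translated = await deps.translate(text, sourceLang, targetLang);

    const caption = {
      timestamp: deps.now(),
      sourceLang,
      targetLang,
      text,
      translated,
    };

    // Step 2: store the caption for later summarization (DynamoDB in the post)
    // and push it to viewers (IVS Chat in the post). The two writes do not
    // depend on each other, so they run concurrently.
    await Promise.all([deps.saveCaption(caption), deps.publish(caption)]);

    return { statusCode: 200, body: JSON.stringify({ translated }) };
  };
}
```

Keeping the AWS calls behind small functions like these also makes the handler easy to exercise locally with stand-ins before wiring it to real services.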

The number of concurrent viewers is updated every minute: Amazon EventBridge invokes an AWS Lambda function, which retrieves the viewer count from the Amazon IVS channel through the API and stores it in Amazon DynamoDB. The count is also sent to the frontend using Amazon IVS Timed Metadata.
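A minimal sketch of such a scheduled function might look like this. The names `createViewerCountHandler`, `getViewerCount`, `saveCount`, and `sendMetadata`, as well as the JSON payload shape, are assumptions made for illustration. In practice the injected functions would presumably wrap the Amazon IVS `GetStream` API (whose response includes a viewer count), a DynamoDB write, and the Amazon IVS `PutMetadata` API.

```javascript
// Sketch only. All names and the metadata payload shape are hypothetical.
function createViewerCountHandler(deps) {
  // Intended to be invoked on a schedule (once per minute in the post).
  return async function handler() {
    // getViewerCount is assumed to resolve to null when the channel is not live.
    const current = await deps.getViewerCount();
    const viewerCount = current ?? 0;

    const record = { timestamp: deps.now(), viewerCount };

    // Keep a history of the count.
    await deps.saveCount(record);

    // Timed metadata travels as a string, so the value is serialized as JSON.
    await deps.sendMetadata(JSON.stringify({ type: "viewerCount", value: viewerCount }));

    return record;
  };
}
```

Sending the count inside the stream means viewers receive it in sync with the video, without polling a separate endpoint.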

As in the previous event, we manage speaker information using Google Sheets, which is displayed on a web page for operators through the Google Sheets API. The number of concurrent viewers is also shown on the operator's web page. The operator’s web page is opened on Amazon WorkSpaces, captured by OBS Studio, and broadcast as a video stream on an Amazon IVS low-latency streaming channel. The frontend on the viewer's side can then load this stream using the SDK, allowing real-time information such as subtitles and concurrent viewer counts to be displayed.
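On the viewer side, handling a timed metadata message could be sketched as below. The payload shape (`{ "type": "viewerCount", "value": <number> }`) and the helper name `applyMetadataCue` are assumptions for illustration; the post does not describe the actual message format. The commented wiring uses the Amazon IVS Player Web SDK's `TEXT_METADATA_CUE` event.

```javascript
// Sketch only. The payload shape and helper name are hypothetical.

// Return a new UI state with the viewer count updated, or the unchanged
// state when the cue is malformed or of an unknown type.
function applyMetadataCue(state, cueText) {
  let payload;
  try {
    payload = JSON.parse(cueText);
  } catch {
    return state; // not JSON: ignore rather than break playback
  }
  const isViewerCount =
    payload !== null &&
    typeof payload === "object" &&
    payload.type === "viewerCount" &&
    Number.isInteger(payload.value) &&
    payload.value >= 0;
  return isViewerCount ? { ...state, viewerCount: payload.value } : state;
}

// Browser wiring with the IVS Player Web SDK (not executed here):
//
//   let state = { viewerCount: 0 };
//   player.addEventListener(IVSPlayer.PlayerEventType.TEXT_METADATA_CUE, (cue) => {
//     state = applyMetadataCue(state, cue.text);
//   });
```

Ignoring unrecognized cues keeps the page working even if other kinds of metadata are added to the stream later.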

All viewer endpoints, such as the streaming site and the operator’s web page, are hosted on AWS Amplify. These are implemented using frontend technologies like JavaScript, React, and Next.js.

This simultaneous translation and streaming environment was implemented by committee members Matsui, Maehara, Sakakibara, and Koitabashi.

Before implementation, Todd Sharp and Hao Chen of AWS gave us valuable advice during design consultations. The Amazon IVS team also supported us with regard to usage and cost.

Here is the session by Todd Sharp of the Amazon IVS team, which is also a sponsor of this event:

https://jawspankration2024.jaws-ug.jp/ja/timetable/TT-61/

Look forward to diving deep into Amazon IVS in this session!

©JAWS-UG (AWS User Group - Japan). All rights reserved.